Original Article

Exp Neurobiol 2019; 28(2): 261-269

Published online April 30, 2019

https://doi.org/10.5607/en.2019.28.2.261

© The Korean Society for Brain and Neural Sciences

The Influence of Anxiety on the Recognition of Facial Emotion Depends on the Emotion Category and Race of the Target Faces

Wonjun Kang1,†, Gayoung Kim1,†, Hyehyeon Kim1, and Sue-Hyun Lee1,2*

1Department of Bio and Brain Engineering, College of Engineering, Korea Advanced Institute of Science and Technology (KAIST), Daejeon 34141, Korea.

2Program of Brain and Cognitive Engineering, College of Engineering, Korea Advanced Institute of Science and Technology (KAIST), Daejeon 34141, Korea.

Correspondence to: *To whom correspondence should be addressed.

TEL: 82-42-350-4311, FAX: 82-42-350-4310

e-mail: suelee@kaist.ac.kr

†These authors contributed equally

Abstract

The recognition of emotional facial expressions is critical for our social interactions. While some prior studies have shown that a high anxiety level is associated with more sensitive recognition of emotion, other reports indicate that anxiety does not affect, or even reduces, sensitivity in the recognition of facial emotions. To reconcile these results, we investigated whether the effect of individual anxiety on the recognition of facial emotions depends on the emotion category and the race of the target faces. First, we found a significant positive correlation between individual anxiety level and recognition sensitivity for angry faces but not for sad or happy faces. Second, while the correlation was significant for both low- and high-intensity angry faces during the recognition of the observer's own-race faces, it was significant only for low-intensity angry faces during the recognition of other-race faces. Collectively, our results suggest that the influence of anxiety on the recognition of facial emotions is flexible, depending on characteristics of the target face stimuli including emotion category and race.

Keywords: Anxiety, Facial emotion, Emotion recognition, Race

INTRODUCTION

Face perception is a basis of our social interactions. In particular, the recognition of facial emotions is essential for forming successful interpersonal relationships because we can infer the emotional states and communicative intentions of other people from their facial expressions [1,2]. Problems in processing emotional faces can thus lead to misinterpretation of social situations and to inappropriate behavior.

A number of studies have suggested that people with psychiatric disorders show aberrant processing of emotional faces [3,4]. In particular, participants with high anxiety have shown atypical recognition of negative facial expressions. For instance, highly anxious participants recognized fearful or threatening faces more sensitively than healthy participants [5,6,7,8,9,10]. Moreover, some studies have also demonstrated an interpretation bias for facial expressions, in which anxious people are more likely to misclassify neutral or ambiguous emotional faces as threatening [10,11,12,13,14]. However, there is also evidence that anxiety does not affect, or even reduces, sensitivity in the recognition of facial emotions. A high-trait-anxiety group did not differ from a low-trait-anxiety group in the perception of facial emotions [15]. Moreover, studies varying the emotional intensity of facial expressions also found no anxiety-dependent effect [16,17], or found only an interpretation bias without enhanced sensitivity in the recognition of threatening faces [11,13,14]. Furthermore, other studies have reported reduced sensitivity to threatening faces in highly anxious participants [18,19]. Thus, the influence of anxiety on the recognition of facial emotions remains controversial.

One possible factor underlying the discrepancies among previous anxiety studies is the effect of different cultural experiences or familiarity with the target faces. Although basic facial expressions are shared across cultures, detailed interpretations of facial expressions are thought to be culture-specific [20,21]. In particular, people have been reported to be better at, and more confident in, recognizing the facial expressions of their own race [22,23,24]. Hunter et al. also reported that Caucasian participants with high social anxiety were more accurate at identifying overall facial expressions in own-race (“in-group”) faces than in other-race (“out-group”) faces [25].

To clarify the general effect of anxiety on the recognition of facial emotions and its interaction with the race of the facial stimuli, we asked participants to perform emotion recognition tasks with angry, happy, and sad faces of varying emotional intensity from three different races. Our data suggest that the influence of anxiety on the recognition of facial emotions depends on the emotion category and race of the face stimuli. A significant positive correlation between individual anxiety level and recognition sensitivity was found only for angry faces, not for faces of the other emotion categories. Moreover, whereas in the recognition of in-group (Asian) facial emotions the correlation between individual anxiety level and sensitivity to angry expressions was significantly positive for both low- and high-intensity angry faces, in the recognition of out-group (non-Asian) facial emotions it was significant only for low-intensity angry faces. These results suggest that the influence of anxiety on the recognition of facial emotions is flexible, depending on the characteristics of the face stimuli including emotion category and race.

MATERIALS AND METHODS

Forty-two Asian (Korean) participants (22 females, aged 22.357±2.639 years) took part in the experiment. Exclusion criteria were a history of psychiatric or neurological disorders, pregnancy, hearing problems, and a history of any blood disease. One additional participant was excluded because she could not finish the experiment owing to claustrophobia-like symptoms that she had never experienced before. Although none of the participants had ever been diagnosed with a psychiatric disorder, their BAI scores ranged from 1 to 19 (see the description of the psychometric tests below). This range spans not only the healthy/minimal (BAI score of 0~7) but also the mild (8~15) and moderate (16~25) anxiety levels. All participants were right-handed and provided written informed consent in accordance with protocols approved by the KAIST Institutional Review Board.

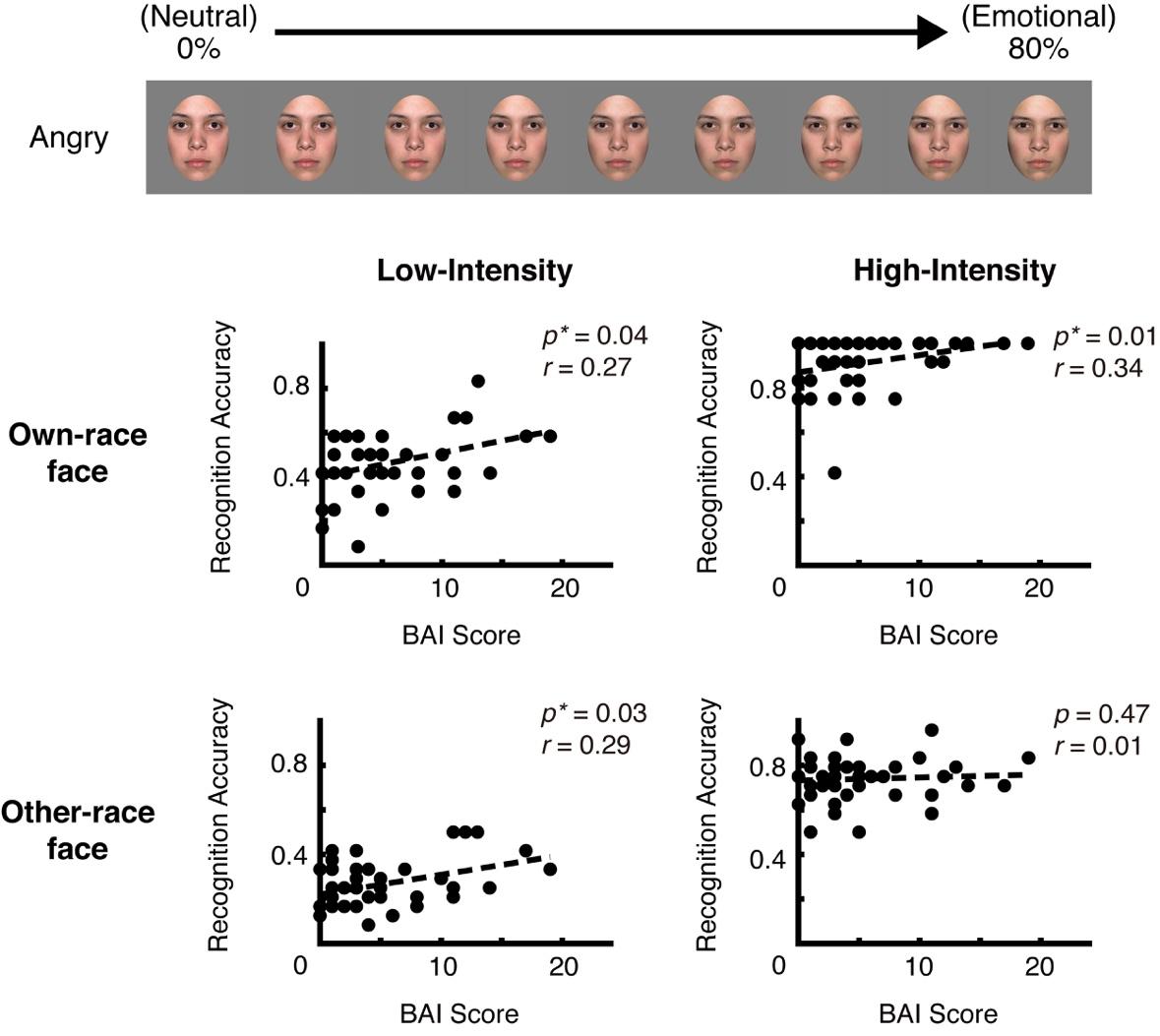

For the Asian face condition, face images of 2 Asian men and 2 Asian women were used; for the non-Asian face condition, face images of 2 African-American men, 2 African-American women, 2 Caucasian men, and 2 Caucasian women were used. The African-American and Caucasian face images were selected from the NimStim Face Stimulus Set (https://www.macbrain.org/resources.htm) [26]. For each face identity, images of prototypical angry, happy, sad, and neutral facial expressions were prepared. For the Asian face stimuli, 1 female Asian face image was selected from the NimStim Face Stimulus Set [26], and the other images were obtained by photographing volunteer actors. The volunteers were informed that their photos would be used only for research, and they provided written informed consent. The example face images in Fig. 1 are from the subset of the NimStim Face Stimulus Set that may be published; they are shown for illustration only and were not used in the experiment.

For each face identity, a graded stimulus series was generated by morphing each prototypical emotional face (angry, happy, or sad) with the neutral face. This yielded 11 intensity levels in 10% increments, from 0% emotional (neutral) to 100% emotional (prototypical angry, happy, or sad), for each emotion category of each identity (Fig. 1A). To measure recognition sensitivity for facial emotions, however, only the neutral image and 8 emotional steps, from 10% to 80% emotional, were used in the experiment for each emotion category of each identity. Face Morpher (https://github.com/alyssaq/face_morpher) and STOIK Morph Man software (http://www.stoik.com/products/video/STOIK-Morph-Man-2016/) were used to morph the face images, and the face contours were post-edited with Adobe Photoshop CC 2017. Each face image was 500×600 pixels.
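
The intensity grid described above can be sketched as follows, assuming the 11 morph levels are evenly spaced in 10% increments (as the 0% to 100% range and the later "step 5 = 50%" example imply). The names `ALL_LEVELS`, `USED_STEPS`, `step_to_intensity`, and `stimulus_levels` are hypothetical; the sketch only enumerates intensities and does not reproduce the landmark-based morphing performed with Face Morpher and STOIK Morph Man.

```python
from typing import Dict, List, Sequence

# All 11 morph levels generated: 0% (neutral) to 100% (prototypical), 10% apart.
ALL_LEVELS: List[int] = list(range(0, 101, 10))

# Emotional steps actually presented (the neutral image was shown as well).
USED_STEPS: List[int] = list(range(1, 9))


def step_to_intensity(step: int) -> int:
    """Map an emotional step (1-8) to its morph intensity in percent."""
    if not 1 <= step <= 8:
        raise ValueError("step must be between 1 and 8")
    return 10 * step


def stimulus_levels(emotions: Sequence[str] = ("angry", "happy", "sad")) -> Dict[str, List[int]]:
    """Hypothetical helper: presented morph intensities per emotion for one identity."""
    return {emo: [step_to_intensity(s) for s in USED_STEPS] for emo in emotions}


if __name__ == "__main__":
    print(len(ALL_LEVELS))               # 11
    print(stimulus_levels()["angry"])    # [10, 20, 30, 40, 50, 60, 70, 80]
```

Keeping steps as integers and converting to percentages in one place avoids off-by-one confusion between "step k" and "k × 10% intensity".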

Participants performed the emotion recognition task in a sound-attenuated room. The task was run in 2 separate blocks: an Asian face condition block and a non-Asian face condition block. Only Asian face stimuli were presented in the Asian face condition block, and only non-Asian (African-American and Caucasian) face stimuli were presented in the non-Asian face condition block.

Each trial of the emotion recognition task consisted of a face phase followed by an orientation phase. In the face phase, participants saw a face stimulus and indicated the perceived emotion category by pressing one of four buttons (1=Angry, 2=Happy, 3=Sad, and 4=Neutral) (Fig. 1B). They were instructed to respond as accurately and quickly as possible, with a maximum response time of 2.5 sec. The face image remained on screen until the response was recorded. After a 1-sec interval, the orientation phase began. In this phase, participants viewed one of four oriented gratings (0°, 45°, 90°, 135°) and were asked to report its orientation (Fig. 1B). The orientation phase was inserted to prevent the previously shown face stimulus from affecting the recognition of the next facial emotion. Participants performed well in the orientation phase: 93.744±15.478% correct in the Asian face condition and 95.359±8.950% in the non-Asian face condition. In both phases, each face or grating image subtended approximately 8° of visual angle on a monitor (1920×1080 resolution, 60 Hz refresh rate), and the response alternatives were displayed below each image.
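
The relation between on-screen image size and the stated 8° of visual angle follows the standard formula θ = 2·atan(s / 2d), where s is the image size and d the viewing distance. The text does not report the viewing distance, so the 57 cm used below (`VIEWING_DISTANCE_CM`) is a hypothetical value chosen only for illustration, as are the function names.

```python
import math

# Hypothetical viewing distance; the study does not report it.
VIEWING_DISTANCE_CM = 57.0


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Full visual angle (degrees) subtended by an object of a given size."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


def size_for_angle_cm(angle_deg: float, distance_cm: float) -> float:
    """On-screen size (cm) needed to subtend `angle_deg` at `distance_cm`."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


if __name__ == "__main__":
    s = size_for_angle_cm(8.0, VIEWING_DISTANCE_CM)
    print(round(s, 2))  # 7.97
    print(round(visual_angle_deg(s, VIEWING_DISTANCE_CM), 6))
```

At roughly 57 cm, 1 cm on screen subtends about 1°, which is why that distance is a common convention; converting to pixels would additionally require the monitor's physical pixel pitch, which is also not reported.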

After the tasks, participants completed the K-BAI (Korean version of the Beck Anxiety Inventory) and K-BDI-II (Korean version of the Beck Depression Inventory-II) questionnaires (www.koreapsy.co.kr). The BAI and BDI are self-report inventories developed by Aaron T. Beck to measure the severity of anxiety and depression [27,28]. BAI scores of 0~9 indicate minimal anxiety, 10~16 mild anxiety, 17~29 moderate anxiety, and 30~63 severe anxiety. BDI scores of 0~9 indicate minimal depression, 10~18 mild depression, 19~29 moderate depression, and 30~63 severe depression. Participants' BAI scores ranged from 1 to 19 (mean=5.26, SD=4.79), and their BDI scores ranged from 0 to 23 (mean=9.64, SD=5.53).
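
Mapping a total score onto the severity bands listed in this paragraph is a simple threshold lookup. The sketch below encodes exactly the cutoffs stated here (both inventories range from 0 to 63); the constant and function names are hypothetical.

```python
from typing import List, Tuple

# (inclusive upper bound, label) for each band, using the cutoffs stated above.
BAI_BANDS: List[Tuple[int, str]] = [(9, "minimal"), (16, "mild"), (29, "moderate"), (63, "severe")]
BDI_BANDS: List[Tuple[int, str]] = [(9, "minimal"), (18, "mild"), (29, "moderate"), (63, "severe")]


def classify(score: int, bands: List[Tuple[int, str]]) -> str:
    """Return the severity label of the first band whose upper bound >= score."""
    if not 0 <= score <= 63:
        raise ValueError("score must be between 0 and 63")
    for upper, label in bands:
        if score <= upper:
            return label
    raise AssertionError("bands must cover 0-63")


if __name__ == "__main__":
    # Highest observed scores in this sample: BAI 19, BDI 23.
    print(classify(19, BAI_BANDS), classify(23, BDI_BANDS))
```

Storing only upper bounds keeps the bands contiguous by construction, so no score can fall between two bands.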

To measure recognition sensitivity for the three facial emotions, we followed a previous study [9] and treated all faces used in the experiment except the neutral faces as emotional (angry, happy, or sad) faces. Thus, the correct answer for the emotionally graded faces (Steps 1 to 8) in the angry, happy, or sad category was angry, happy, or sad, respectively. The percentage of correct answers was computed for the faces of each emotion category.
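
The scoring rule above can be sketched as follows: every non-neutral morph counts as emotional, so a "neutral" response to, say, a 10% angry face is scored as incorrect. The `Trial` record and `accuracy_by_category` function are hypothetical, and treating a missed response (no button press within 2.5 sec, encoded here as an empty string) as incorrect is an assumption the text does not state.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass
class Trial:
    category: str   # morph continuum: "angry", "happy", or "sad"
    step: int       # emotional step 1-8 (10%-80%); neutral trials are not scored here
    response: str   # "angry", "happy", "sad", "neutral", or "" (assumed: no response)


def accuracy_by_category(trials: Iterable[Trial]) -> Dict[str, float]:
    """Percentage of emotional trials whose response matches the morph category."""
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for t in trials:
        totals[t.category] += 1
        # Any response other than the morph's own category (including
        # "neutral" or a missed response) counts as an error.
        hits[t.category] += int(t.response == t.category)
    return {c: 100.0 * hits[c] / totals[c] for c in totals}


if __name__ == "__main__":
    demo = [Trial("angry", 1, "neutral"), Trial("angry", 8, "angry"), Trial("sad", 3, "sad")]
    print(accuracy_by_category(demo))  # {'angry': 50.0, 'sad': 100.0}
```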

Two-tailed one-sample t-tests were used to compare the mean correct-response rate for each emotion with chance level (25%). Repeated-measures ANOVAs (tests of within-subjects effects) were used to assess the effects of stimulus race. Greenhouse-Geisser corrections were applied to all ANOVAs with factors having more than two levels. For the correlation analyses, one-tailed Spearman correlations were used because a positive direction was predicted. Statistical analyses were performed with MATLAB and SPSS.
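
Two of the tests named above can be sketched with the standard library alone: the one-sample t statistic against the 25% chance level, and the Spearman coefficient as the Pearson correlation of tie-averaged ranks. This is a minimal sketch, not the authors' MATLAB/SPSS code, and all function names are hypothetical. The one-tailed p-value here comes from a permutation test, which is a swapped-in technique; SPSS instead uses a t-approximation, and SciPy's `scipy.stats.ttest_1samp` and `scipy.stats.spearmanr(x, y, alternative="greater")` return analytic p-values directly.

```python
import math
import random
from statistics import mean, stdev
from typing import List, Sequence, Tuple


def one_sample_t(values: Sequence[float], mu: float = 25.0) -> Tuple[float, int]:
    """t statistic and degrees of freedom for H0: population mean == mu."""
    n = len(values)
    t = (mean(values) - mu) / (stdev(values) / math.sqrt(n))
    return t, n - 1


def average_ranks(x: Sequence[float]) -> List[float]:
    """1-based ranks; tied values receive the mean of the ranks they span."""
    order = sorted(range(len(x)), key=lambda i: x[i])
    ranks = [0.0] * len(x)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and x[order[j + 1]] == x[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of 0-based positions i..j, shifted to 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman rho: Pearson correlation of the tie-averaged ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def one_tailed_perm_p(x, y, n_perm: int = 10000, seed: int = 0) -> float:
    """Permutation p-value for H1: rho > 0 (alternative to the t-approximation)."""
    rng = random.Random(seed)
    observed = spearman(x, y)
    y_perm = list(y)
    count = 0
    for _ in range(n_perm):
        rng.shuffle(y_perm)
        if spearman(x, y_perm) >= observed:
            count += 1
    return (count + 1) / (n_perm + 1)
```

Computing Spearman's rho through ranks plus Pearson handles tied scores correctly, which matters for questionnaire totals such as BAI scores where ties are common.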

RESULTS

To investigate the recognition of emotional facial expressions, we asked participants to perform a four-alternative forced-choice task in which they judged whether the expression of a presented face was angry, happy, sad, or neutral. Each face image was drawn from a graded series ranging from neutral (0% emotional intensity) to 80% angry, happy, or sad (100% emotional intensity indicates the prototypical angry, happy, or sad expression) (Fig. 1). There were 8 steps of emotional intensity, increasing in 10% increments from 10% to 80%, for each emotion category (e.g., step 5 of the angry series is a 50% angry face).

We first examined how sensitively the participants recognized the emotional expressions of Asian, African-American, and Caucasian faces. Accuracy (the mean of the correct-response rates across the 8 steps of each emotion category) was greater than chance in all emotion categories (angry, happy, sad) and for all races of face stimuli (all t(41)>6.773, p<0.001) (Fig. 2A), indicating that the participants performed the task successfully. To directly compare responses across stimulus races within each emotion category, we conducted a two-way repeated-measures ANOVA with stimulus race (Asian, African-American, Caucasian) and emotion category (angry, happy, sad) as within-subject factors. This analysis revealed significant main effects of stimulus race (F(1.887, 77.369)=96.049, p<0.001) and emotion category (F(1.862, 76.334)=17.942, p<0.001), and a significant race × emotion interaction (F(3.584, 146.935)=11.240, p<0.001). Post-hoc Bonferroni pairwise comparisons showed that recognition accuracy for Asian faces was greater than that for Caucasian faces in all emotion categories (p<0.001 for angry, happy, and sad) (Fig. 2A). In addition, accuracy for Asian faces was significantly greater than that for African-American faces in the angry (p<0.001) and happy (p=0.028) conditions (Fig. 2A). Thus, emotional facial expressions were recognized more accurately in Asian faces than in non-Asian faces. Given that the participants were Asian, this result is consistent with prior research showing that people are generally better at recognizing the facial expressions of in-group members than those of other groups [22,24].
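
The fractional degrees of freedom above come from the Greenhouse-Geisser correction, which multiplies both degrees of freedom of a within-subject effect by an estimated ε. As a consistency check on the reported values, the stimulus-race effect F(1.887, 77.369) implies ε ≈ 1.887/2 ≈ 0.94 and an error df of about 1.887 × 41 ≈ 77.37, matching the reported value up to rounding. The sketch below uses the standard estimator ε = tr(PSP)² / ((k-1)·tr((PSP)²)) for a single within-subject factor with k levels, where S is the k×k covariance matrix of the repeated measures and P = I - J/k; it does not cover the interaction term, whose ε is computed on interaction contrasts. Function names are hypothetical, and this is not SPSS's internal code.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def double_center(S: Sequence[Sequence[float]]) -> Matrix:
    """Return P S P with P = I - J/k (subtract row and column means, add grand mean)."""
    k = len(S)
    row = [sum(r) / k for r in S]
    col = [sum(S[i][j] for i in range(k)) / k for j in range(k)]
    grand = sum(row) / k
    return [[S[i][j] - row[i] - col[j] + grand for j in range(k)] for i in range(k)]


def gg_epsilon(S: Sequence[Sequence[float]]) -> float:
    """Greenhouse-Geisser epsilon for one within-subject factor with k levels."""
    k = len(S)
    C = double_center(S)
    tr = sum(C[i][i] for i in range(k))
    tr_sq = sum(C[i][j] * C[j][i] for i in range(k) for j in range(k))  # tr(C @ C)
    return tr * tr / ((k - 1) * tr_sq)


def corrected_dfs(eps: float, effect_df: int, n_subjects: int) -> Tuple[float, float]:
    """Corrected (numerator, error) df for a within-subject effect."""
    return eps * effect_df, eps * effect_df * (n_subjects - 1)


if __name__ == "__main__":
    # Compound symmetry satisfies sphericity, so epsilon is 1.
    print(gg_epsilon([[2, 1, 1], [1, 2, 1], [1, 1, 2]]))
    # Epsilon implied by the reported race effect (3 levels, 42 participants).
    print(corrected_dfs(1.887 / 2, 2, 42))
```

ε ranges from 1/(k-1) (maximal violation of sphericity) to 1 (sphericity holds), so for the three-level race factor the correction can at most halve the degrees of freedom.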

We also analyzed recognition accuracy separately for faces with relatively low-intensity (20%, 30%, and 40% emotional intensity) and high-intensity (60%, 70%, and 80% emotional intensity) emotional expressions (Fig. 2B). For both low- and high-intensity faces, we found the same pattern as for all faces combined: a two-way repeated-measures ANOVA with stimulus race and emotion category as factors revealed main effects of race (F(1.986, 81.422)=93.571, p<0.001 for low intensity; F(1.886, 77.311)=44.024, p<0.001 for high intensity) and emotion category (F(1.726, 70.763)=19.077, p<0.001 for low intensity; F(1.315, 53.909)=25.660, p<0.001 for high intensity), and a significant race × emotion interaction (F(3.184, 130.534)=12.662, p<0.001 for low intensity; F(3.160, 129.548)=8.988, p<0.001 for high intensity). Bonferroni post-hoc tests further showed that accuracy for Asian faces was significantly greater than for Caucasian faces in all low-intensity conditions (p<0.001 for angry, p<0.001 for happy, p=0.015 for sad) and in the high-intensity angry (p<0.001) and sad (p=0.002) conditions, but not in the high-intensity happy condition (p=0.236). Taken together, these results suggest that sensitivity to facial emotions is higher for faces of the participants' own race than for other-race faces.
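
The low/high split above uses steps 2 to 4 and steps 6 to 8, so the 10% (step 1) and 50% (step 5) faces fall in neither bin. A minimal sketch of that binning, with a hypothetical `Trial` record and function names, follows; the step-to-bin mapping is taken directly from the intensities listed in the text.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Optional, Tuple

LOW_STEPS = {2, 3, 4}   # 20%, 30%, 40% emotional intensity
HIGH_STEPS = {6, 7, 8}  # 60%, 70%, 80% emotional intensity


@dataclass
class Trial:
    category: str  # "angry", "happy", or "sad"
    step: int      # emotional step 1-8
    response: str  # participant's choice


def intensity_bin(step: int) -> Optional[str]:
    """Return "low", "high", or None for steps outside both bins (1 and 5)."""
    if step in LOW_STEPS:
        return "low"
    if step in HIGH_STEPS:
        return "high"
    return None


def accuracy_by_bin(trials: Iterable[Trial]) -> Dict[Tuple[str, str], float]:
    """Percent correct per (emotion category, intensity bin)."""
    hits: Dict[Tuple[str, str], int] = {}
    totals: Dict[Tuple[str, str], int] = {}
    for t in trials:
        b = intensity_bin(t.step)
        if b is None:
            continue  # 10% and 50% faces are excluded from this analysis
        key = (t.category, b)
        totals[key] = totals.get(key, 0) + 1
        hits[key] = hits.get(key, 0) + int(t.response == t.category)
    return {k: 100.0 * hits[k] / totals[k] for k in totals}
```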

To investigate the influence of individual anxiety level on the recognition of emotional facial expressions, we computed correlations between participants' mean accuracy in facial emotion recognition and their BAI scores. Because participants performed best in recognizing emotions in Asian faces, we first focused on responses to Asian faces. Recognition accuracy for angry faces was positively correlated with BAI scores (r=0.375, p=0.007) (Fig. 3A). When we computed the correlations separately for low- and high-intensity angry faces, both were positive and significant (r=0.266, p=0.044 for low intensity; r=0.342, p=0.013 for high intensity) (Fig. 3B). To control for the effects of age and gender, we conducted partial correlation analyses between individual anxiety level and sensitivity to angry expressions, with age or gender as the control variable. The pattern observed in Fig. 3 was preserved, although the low-intensity correlation did not reach significance when gender was controlled (age controlled: r=0.372, p=0.009 for total intensity; r=0.266, p=0.047 for low intensity; r=0.347, p=0.013 for high intensity; gender controlled: r=0.329, p=0.018 for total intensity; r=0.218, p=0.085 for low intensity; r=0.307, p=0.026 for high intensity). No positive relationship between recognition accuracy and BAI scores was observed for the other emotions (Table 1). These results suggest that, during the recognition of Asian facial emotions, recognition accuracy for angry faces, but not for other emotional faces, depends on individual anxiety level.
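
A partial correlation with one control variable z can be obtained from the three pairwise coefficients as r_xy·z = (r_xy - r_xz·r_yz) / sqrt((1 - r_xz²)(1 - r_yz²)), and tested with df = n - 2 - (number of controls), i.e. 39 here for n = 42 and one control. Whether the authors applied this formula to Spearman or to Pearson coefficients is not stated; applying it to Spearman coefficients (a partial Spearman correlation) is an assumption of this sketch, and the function names are hypothetical.

```python
import math
from typing import Tuple


def partial_corr(r_xy: float, r_xz: float, r_yz: float) -> float:
    """First-order partial correlation of x and y, controlling for z."""
    return (r_xy - r_xz * r_yz) / math.sqrt((1.0 - r_xz ** 2) * (1.0 - r_yz ** 2))


def partial_corr_t(r: float, n: int, n_controls: int = 1) -> Tuple[float, int]:
    """t statistic and df for testing a partial correlation against zero."""
    df = n - 2 - n_controls
    return r * math.sqrt(df / (1.0 - r ** 2)), df


if __name__ == "__main__":
    # If z is unrelated to both x and y, the partial equals the zero-order r.
    print(partial_corr(0.375, 0.0, 0.0))
    print(partial_corr_t(0.372, 42)[1])  # 39
```

The first usage line illustrates why controlling for age barely changed the coefficients: a covariate that is nearly uncorrelated with both variables leaves r almost unchanged.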

We next examined the relationship between individual anxiety level and facial emotion recognition for non-Asian faces. To increase statistical power, we combined the responses to African-American and Caucasian faces. In the non-Asian face condition, we found a significant correlation for low-intensity angry faces (r=0.285, p=0.034) but not for high-intensity angry faces (r=0.010, p=0.474) (Fig. 4). This pattern was also observed when controlling for age (r=0.275, p=0.041 for low intensity; r=-0.059, p=0.644 for high intensity), and a similar but weaker pattern was observed when controlling for gender (r=0.248, p=0.059 for low intensity; r=0.066, p=0.340 for high intensity). There were no significant correlations between emotion recognition and BAI scores for the other emotion categories at either intensity (Table 2). Thus, whereas BAI scores were positively related to the recognition of both low- and high-intensity angry faces in the Asian face condition (Fig. 3), during the recognition of non-Asian facial emotions this positive relationship was observed only for low-intensity angry faces (Fig. 4).

Because anxiety and depression frequently co-occur [29,30], we also investigated the relationship between sensitivity to facial expressions and individual depression level as measured by BDI scores (Tables 3 and 4). We did not find any significant effect of individual depression on the recognition of facial emotions for either Asian (Table 3) or non-Asian faces (Table 4). We also examined the relationship between sensitivity to facial expressions and BAI scores while controlling for individual BDI scores, and found the same pattern shown in Figs. 3 and 4 (Table 5). These results suggest that perceptual sensitivity to angry expressions depends mainly on individual anxiety level rather than on depression level.

DISCUSSION

Our findings show that the effect of anxiety on the recognition of facial expressions is flexible, depending on the emotion category and the race of the face stimuli. More anxious participants showed higher sensitivity in recognizing angry expressions but not other emotional expressions. Moreover, whereas individual anxiety level was significantly and positively correlated with sensitivity to both low- and high-intensity angry faces in the recognition of in-group (Asian) facial emotions, the correlation was found only for low-intensity, not high-intensity, angry faces in the recognition of out-group (non-Asian) facial emotions. These results suggest that the influence of anxiety on the recognition of facial emotions depends on the characteristics of the face stimuli, including emotion category and race.

Prior studies have shown that anxious individuals are particularly sensitive in recognizing facial expressions of negative emotions [7,8,9,10]. However, there are also reports that individual anxiety does not affect, or even reduces, sensitivity in the recognition of facial emotions [15,16,17,18,19]. Moreover, Hunter et al. showed that Caucasian participants with high social anxiety were more accurate in assessing facial emotions than participants with lower social anxiety, especially for Caucasian face stimuli [25]. Our results extend these findings and offer a potential explanation for the discrepancies: the relationship between individual anxiety and the sensitivity of emotion recognition is flexible, depending on the emotion category and the race of the target faces.

Our results also replicate previous findings that emotion recognition is more accurate for faces of members of one's own cultural group (the in-group advantage) (Fig. 2) [21]. This difference in the recognition of emotional faces of in-group versus out-group members may interact with the anxiety-dependent recognition of facial emotions. In the present study, participants with higher anxiety perceived low-intensity angry faces of both in-group and out-group members more sensitively, but in the high-intensity angry face condition this anxiety-dependent sensitivity was observed only for in-group faces (Figs. 3 and 4). These results accord with a previous study by Gutiérrez-García and Calvo showing that social anxiety was associated with increased sensitivity to low-intensity (25%) angry and disgusted facial expressions [9]. Given that high anxiety is associated with a threat-related interpretation bias [31], and that the low-intensity angry faces in the present study contained more neutral features and fewer typical angry features, our result in the low-intensity angry condition may reflect an anxiety-dependent negative bias in the perception of facial expressions. For high-intensity angry faces, which contained more typical angry features and fewer neutral features, individual anxiety may affect the recognition of angry expressions only when the specificity of the angry expression is high enough, as in the case of in-group faces. These possibilities, including the question of which neural mechanisms give rise to the intensity-dependent difference between in-group and out-group faces, need to be investigated in future work.

Additionally, although the emotionally graded face images were generated automatically by morphing each prototypical emotional face with the neutral face, as in previous studies [8,9,10], the individual prototypical emotional faces may still differ in the intensity of their emotional expression. Also, because our results suggest a stimulus-dependent effect of anxiety in Asian participants, further investigation is needed to determine whether people of other races show the same effect.

Anxiety disorders are considered a diverse group of conditions; the pathological forms of anxiety vary from patient to patient [32,33]. In addition, anxiety disorders are frequently accompanied by other mental disorders such as depression [29,30,34]. This heterogeneity may therefore be one of the factors underlying the discrepancies in sensitivity to facial emotions among previous patient studies. Our data show a positive relationship between individual anxiety level and sensitivity to angry expressions in participants without a diagnosed psychiatric disorder whose anxiety scores fell in the minimal-to-moderate range. These results support the idea that the increased recognition sensitivity observed in some prior patient studies is not a distinctive feature of specific anxiety patient groups but a general effect of individual anxiety level.

Based on reports of the comorbidity of anxiety and depression [29,30,34] and on evidence that both involve aberrant facial emotion processing [4], we also examined whether the sensitivity of facial emotion recognition depends on individual depression level. Our data did not show any effect of individual depression on the recognition of angry faces (Tables 3, 4, and 5). Thus, a mechanism at least partially separate from that of depression may underlie the anxiety-related differences in the recognition of facial expressions. While some previous studies suggest that patients with anxiety or depression generally show aberrant facial emotion recognition [4], there are also reports of distinct effects of anxiety and depression. According to Joormann and Gotlib (2006), depressed patients showed aberrant emotional processing of happy faces, whereas patients with an anxiety disorder (social phobia) showed abnormal processing of angry faces [7]. Other studies have shown that a particular emotion category is primarily affected by high anxiety or depression levels [5,6,8,9,10,11,12,13,35,36]. Distinct effects of anxiety and depression on fear generalization have also been reported [37]. Future research is needed to reveal the detailed mechanisms underlying these different processes in anxiety and depression.

In conclusion, the present study shows that sensitivity to angry faces was positively correlated with individual anxiety level, and that this relationship depended on the emotion category and race of the face stimuli. During the recognition of in-group (Asian) faces, angry face recognition was correlated with individual anxiety level regardless of emotional intensity, whereas during the recognition of out-group (non-Asian) faces, only sensitivity to low-intensity angry faces was correlated with anxiety. No such correlation was observed for other emotional faces. These results suggest that individual anxiety has flexible, stimulus-dependent effects on the recognition of facial emotions.

References

- Horstmann G. What do facial expressions convey: feeling states, behavioral intentions, or action requests? Emotion 2003;3:150-166.

- Seidel EM, Habel U, Finkelmeyer A, Schneider F, Gur RC, Derntl B. Implicit and explicit behavioral tendencies in male and female depression. Psychiatry Res 2010;177:124-130.

- Bourke C, Douglas K, Porter R. Processing of facial emotion expression in major depression: a review. Aust N Z J Psychiatry 2010;44:681-696.

- Demenescu LR, Kortekaas R, den Boer JA, Aleman A. Impaired attribution of emotion to facial expressions in anxiety and major depression. PLoS One 2010;5:e15058.

- Surcinelli P, Codispoti M, Montebarocci O, Rossi N, Baldaro B. Facial emotion recognition in trait anxiety. J Anxiety Disord 2006;20:110-117.

- Doty TJ, Japee S, Ingvar M, Ungerleider LG. Fearful face detection sensitivity in healthy adults correlates with anxiety-related traits. Emotion 2013;13:183-188.

- Joormann J, Gotlib IH. Is this happiness I see? Biases in the identification of emotional facial expressions in depression and social phobia. J Abnorm Psychol 2006;115:705-714.

- Frenkel TI, Bar-Haim Y. Neural activation during the processing of ambiguous fearful facial expressions: an ERP study in anxious and nonanxious individuals. Biol Psychol 2011;88:188-195.

- Gutiérrez-García A, Calvo MG. Social anxiety and threat-related interpretation of dynamic facial expressions: sensitivity and response bias. Pers Individ Dif 2017;107:10-16.

- Yoon KL, Yang JW, Chong SC, Oh KJ. Perceptual sensitivity and response bias in social anxiety: an application of signal detection theory. Cognit Ther Res 2014;38:551-558.

- Winton EC, Clark DM, Edelmann RJ. Social anxiety, fear of negative evaluation and the detection of negative emotion in others. Behav Res Ther 1995;33:193-196.

- Richards A, French CC, Calder AJ, Webb B, Fox R, Young AW. Anxiety-related bias in the classification of emotionally ambiguous facial expressions. Emotion 2002;2:273-287.

- Heuer K, Lange WG, Isaac L, Rinck M, Becker ES. Morphed emotional faces: emotion detection and misinterpretation in social anxiety. J Behav Ther Exp Psychiatry 2010;41:418-425.

- Bell C, Bourke C, Colhoun H, Carter F, Frampton C, Porter R. The misclassification of facial expressions in generalised social phobia. J Anxiety Disord 2011;25:278-283.

- Cooper RM, Rowe AC, Penton-Voak IS. The role of trait anxiety in the recognition of emotional facial expressions. J Anxiety Disord 2008;22:1120-1127.

- Jusyte A, Schönenberg M. Threat processing in generalized social phobia: an investigation of interpretation biases in ambiguous facial affect. Psychiatry Res 2014;217:100-106.

- Philippot P, Douilliez C. Social phobics do not misinterpret facial expression of emotion. Behav Res Ther 2005;43:639-652.

- Montagne B, Schutters S, Westenberg HG, van Honk J, Kessels RP, de Haan EH. Reduced sensitivity in the recognition of anger and disgust in social anxiety disorder. Cogn Neuropsychiatry 2006;11:389-401.

- Jarros RB, Salum GA, Belem da Silva CT, Toazza R, de Abreu Costa M, Fumagalli de Salles J, Manfro GG. Anxiety disorders in adolescence are associated with impaired facial expression recognition to negative valence. J Psychiatr Res 2012;46:147-151.

- Ekman P, Friesen WV, O'Sullivan M, Chan A, Diacoyanni-Tarlatzis I, Heider K, Krause R, LeCompte WA, Pitcairn T, Ricci-Bitti PE, Scherer K, Tomita M, Tzavaras A. Universals and cultural differences in the judgments of facial expressions of emotion. J Pers Soc Psychol 1987;53:712-717.

- Elfenbein HA, Ambady N. Universals and cultural differences in recognizing emotions. Curr Dir Psychol Sci 2003;12:159-164.

- Elfenbein HA, Ambady N. On the universality and cultural specificity of emotion recognition: a meta-analysis. Psychol Bull 2002;128:203-235.

- Beaupré MG, Hess U. An ingroup advantage for confidence in emotion recognition judgments: the moderating effect of familiarity with the expressions of outgroup members. Pers Soc Psychol Bull 2006;32:16-26.

- Dailey MN, Joyce C, Lyons MJ, Kamachi M, Ishi H, Gyoba J, Cottrell GW. Evidence and a computational explanation of cultural differences in facial expression recognition. Emotion 2010;10:874-893.

- Hunter LR, Buckner JD, Schmidt NB. Interpreting facial expressions: the influence of social anxiety, emotional valence, and race. J Anxiety Disord 2009;23:482-488.

- Tottenham N, Tanaka JW, Leon AC, McCarry T, Nurse M, Hare TA, Marcus DJ, Westerlund A, Casey BJ, Nelson C. The NimStim set of facial expressions: judgments from untrained research participants. Psychiatry Res 2009;168:242-249.

- Beck AT, Steer RA, Brown GK. Manual for the Beck Depression Inventory-II. San Antonio, TX: Psychological Corporation, 1996.

- Beck AT, Epstein N, Brown G, Steer RA. An inventory for measuring clinical anxiety: psychometric properties. J Consult Clin Psychol 1988;56:893-897.

- Gorman JM. Comorbid depression and anxiety spectrum disorders. Depress Anxiety 1996-1997;4:160-168.

- Pollack MH. Comorbid anxiety and depression. J Clin Psychiatry 2005;66:22-29.

- Beard C. Cognitive bias modification for anxiety: current evidence and future directions. Expert Rev Neurother 2011;11:299-311.

- Craske MG, Stein MB, Eley TC, Milad MR, Holmes A, Rapee RM, Wittchen HU. Anxiety disorders. Nat Rev Dis Primers 2017;3:17024.

- Coelho HF, Cooper PJ, Murray L. A family study of co-morbidity between generalized social phobia and generalized anxiety disorder in a non-clinic sample. J Affect Disord 2007;100:103-113.

- Regier DA, Rae DS, Narrow WE, Kaelber CT, Schatzberg AF. Prevalence of anxiety disorders and their comorbidity with mood and addictive disorders. Br J Psychiatry Suppl 1998;173:24-28.

- Surguladze SA, Young AW, Senior C, Brébion G, Travis MJ, Phillips ML. Recognition accuracy and response bias to happy and sad facial expressions in patients with major depression. Neuropsychology 2004;18:212-218.

- Yoon KL, Joormann J, Gotlib IH. Judging the intensity of facial expressions of emotion: depression-related biases in the processing of positive affect. J Abnorm Psychol 2009;118:223-228.

- Park D, Lee HJ, Lee SH. Generalization of conscious fear is positively correlated with anxiety, but not with depression. Exp Neurobiol 2018;27:34-44.