Short Communication

Exp Neurobiol 2023; 32(2): 102-109

Published online April 30, 2023

https://doi.org/10.5607/en23004

© The Korean Society for Brain and Neural Sciences

Caenorhabditis elegans Connectomes of both Sexes as Image Classifiers

Changjoo Park* and Jinseop S. Kim*

Department of Biological Sciences, Sungkyunkwan University, Suwon 16419, Korea

Correspondence to: *To whom correspondence should be addressed.

Changjoo Park, TEL: 82-31-290-7084, FAX: 82-31-290-7015

e-mail: cjpark147@skku.edu

Jinseop S. Kim, TEL: 82-31-290-7014, FAX: 82-31-290-7015

e-mail: jinseopskim@skku.edu

This is an Open Access article distributed under the terms of the Creative Commons Attribution Non-Commercial License (http://creativecommons.org/licenses/by-nc/4.0) which permits unrestricted non-commercial use, distribution, and reproduction in any medium, provided the original work is properly cited.

Abstract

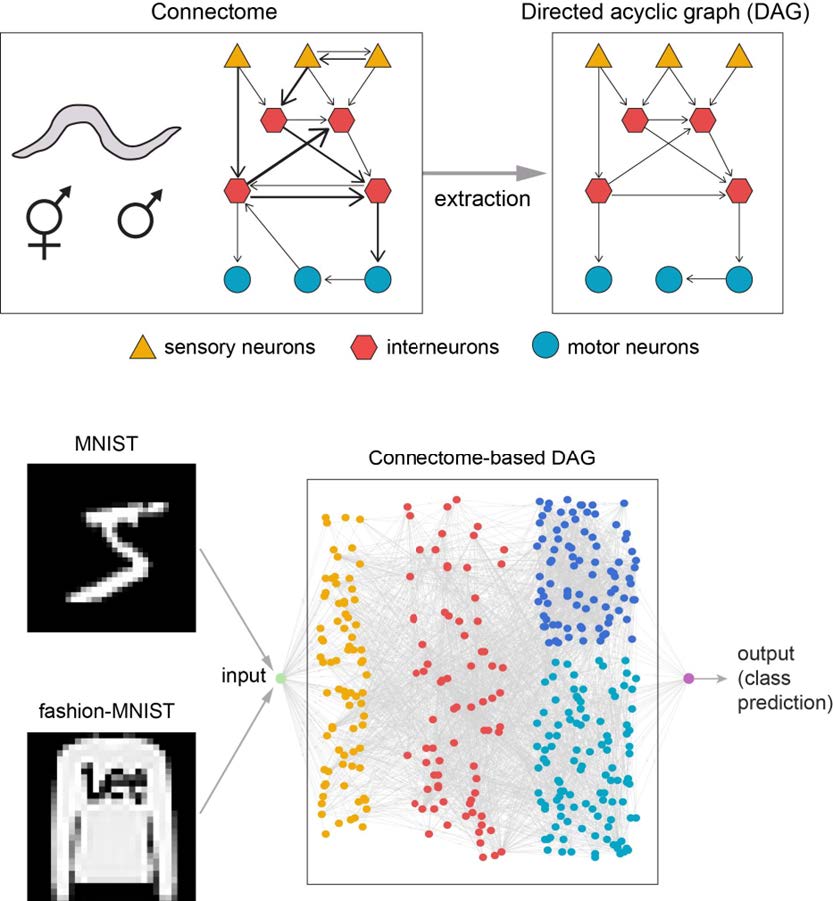

Connectome, the complete wiring diagram of the nervous system of an organism, is the biological substrate of the mind. While biological neural networks are crucial to the understanding of neural computation mechanisms, recent artificial neural networks (ANNs) have been developed independently from the study of real neural networks. Computational scientists are searching for various ANN architectures to improve machine learning since the architectures are associated with the accuracy of ANNs. A recent study used the hermaphrodite

Keywords: Connectome, Artificial neural network,

INTRODUCTION

The nodes in artificial neural networks (ANNs) are highly simplified models of biological neurons, and networks of such nodes learn by adjusting the synaptic weights of their edges [1]. Although ANNs partially mimic biological learning, their network architecture is usually designed by hand in regular patterns or found by search algorithms [2-5]. The architecture of an ANN matters because it is the streambed for neural information flow, such as aggregation and distribution.

The purpose of this study is to explore ANN architectures inspired by biological neural networks, especially the connectome. A connectome is the complete wiring diagram of all the synaptic connections among all the neurons of an animal [6], and thus it is the biological basis of any mental process and behavior. We construct an ANN using the

Many experiments have demonstrated that ANN architecture is associated with accuracy, specifically in image classification tasks. Convolutional neural networks (CNNs) outperform multilayer perceptrons [8]. The success of deep learning is attributed to the fact that accuracy improves in networks with more hidden layers [9, 10]. In addition, skip connections between distant layers, which mimic biological long-range feedforward connections, improve accuracy further [11, 12].

Another plausible way of constructing a network architecture is to refer to the biological networks. In a recent study, a connectome-based modeling of ANN architecture was explored using hermaphrodite

As an expansion of the previous study, here we test whether more realistic biological network architecture yields higher accuracy. We model connectome-based ANN architectures preserving the neuronal types and edge directions. We also compare the two sexes of

MATERIALS AND METHODS

Data source

We used the data from WormWiring, a website hosting the nematode connectome data mapped from electron microscope images [13]. We downloaded the latest versions of structural connectomes for both hermaphrodite and adult male

The chemical connectome is represented by a weighted directed graph, in which each edge weight is the number of synapses between a pair of neurons and each edge points from the presynaptic to the postsynaptic neuron. The data are provided in MATLAB’s graph data structure together with metadata. In the metadata, each neuron has a unique name and is classified into one of five types (sensory, interneuron, motor, sex-specific, or other); the sensory type is used in our method as described later. After removal of electrical synapses and isolated neurons, 282 nodes (neurons) and 3,567 edges (synaptic connections) remained in the graph of the hermaphrodite connectome, while 357 nodes and 3,889 edges remained in the graph of the male connectome.
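As an illustration of this representation, the following minimal sketch stores a chemical connectome as a dictionary of weighted directed edges and drops neurons that have no chemical synapses. The function name, neuron names, and synapse counts are hypothetical and chosen only for the example; the actual data come from the WormWiring files.

```python
from collections import defaultdict

def build_chemical_graph(synapses, neurons):
    """Return (nodes, edges) of a weighted directed graph.

    synapses: iterable of (pre, post) pairs, one pair per chemical synapse.
    neurons:  iterable of all neuron names, including unconnected ones.
    """
    edges = defaultdict(int)
    for pre, post in synapses:
        edges[(pre, post)] += 1  # edge weight = number of synapses
    connected = {name for edge in edges for name in edge}
    nodes = [n for n in neurons if n in connected]  # drop isolated neurons
    return nodes, dict(edges)

# Toy data (hypothetical names, not real C. elegans neurons)
toy_neurons = ["S1", "I1", "M1", "X1"]
toy_synapses = [("S1", "I1"), ("S1", "I1"), ("I1", "M1"), ("M1", "I1")]
nodes, edges = build_chemical_graph(toy_synapses, toy_neurons)
```

In this toy example, `X1` has no synapses and is removed, and the two synapses from `S1` to `I1` are merged into a single edge of weight 2.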

Extraction of DAG and network topology from connectome

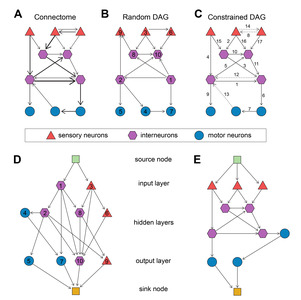

The original

A directed acyclic graph (DAG) can be obtained by removing cyclic connections from a graph (Fig. 1A~C). In the previous study, a random DAG was obtained from the connectome by randomly assigning indices to all the nodes and then setting each edge to point from the smaller-index node to the larger-index node (Fig. 1A, B). The topology of the neural network is then determined by adding two nodes to the DAG: a source node that connects to the input layer nodes and a sink node that receives connections from the output layer nodes, which ensures a single input and a single output for the DAG (Fig. 1D). Here, nodes with zero in-degree are taken as the input layer and nodes with zero out-degree as the output layer. The source node copies the input and distributes it to the input layer nodes, and the sink node averages the outputs of the output layer nodes [7, 17]. However, this procedure severely disrupts the information flow of the connectome because the edge directions are assigned arbitrarily.
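The random-DAG procedure described above can be sketched as follows. This is an illustrative reading of the description rather than the code of the previous study; the function names, the `SOURCE` and `SINK` labels, and the handling of self-loops are assumptions.

```python
import random

def random_dag(nodes, edges, seed=0):
    """Orient every edge from the lower to the higher random node index."""
    rng = random.Random(seed)
    order = list(nodes)
    rng.shuffle(order)  # random index assignment
    index = {n: i for i, n in enumerate(order)}
    dag = set()
    for u, v in edges:
        if u == v:
            continue  # assumption: self-loops are dropped
        dag.add((u, v) if index[u] < index[v] else (v, u))
    return dag

def add_source_and_sink(nodes, dag, source="SOURCE", sink="SINK"):
    """Attach a source to 0-in-degree nodes and a sink to 0-out-degree nodes."""
    has_in = {v for _, v in dag}
    has_out = {u for u, _ in dag}
    inputs = [n for n in nodes if n not in has_in]
    outputs = [n for n in nodes if n not in has_out]
    full = set(dag)
    full |= {(source, n) for n in inputs}
    full |= {(n, sink) for n in outputs}
    return full, inputs, outputs

# Toy example: a three-node cycle becomes acyclic after reorientation.
toy_nodes = ["a", "b", "c"]
toy_edges = [("a", "b"), ("b", "c"), ("c", "a")]
toy_dag = random_dag(toy_nodes, toy_edges, seed=1)
toy_full, toy_inputs, toy_outputs = add_source_and_sink(toy_nodes, toy_dag)
```

Because every edge points from a smaller to a larger index, no cycle can remain, but the original direction of an edge is kept only by chance.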

In this study, we aimed to construct a new network topology which preserves the flow of information of the connectome. A constrained DAG is obtained by the procedure which sets all and only the sensory neurons to the input layer and maximally retains the number and direction of the edges. We extract a DAG from the connectome G with the following steps. Let the set of all the neurons in

1. Get all the nodes of sensory neurons from

2. Search G to find all the “unused” nodes which are connected by nodes in

3. Repeat step 2 until the flags of all the neurons are “used”.

4. Let

Contrary to the case of random DAG, the layer of

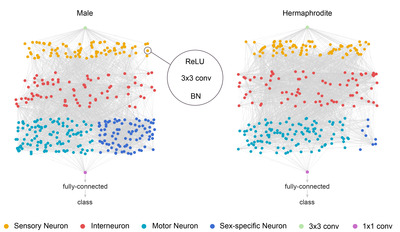

The hermaphrodite DAG has a total of 282 nodes and 2,260 edges, and the male DAG has a total of 357 nodes and 2,494 edges, excluding the source and sink nodes. These networks were used as the basis of the neural network architectures described in the following section.
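One possible reading of the constrained DAG extraction is sketched below: nodes are layered breadth-first starting from the sensory neurons, and only edges that point from an earlier layer to a later one are retained. The exact edge-retention rule of step 4 is not shown in full here, so that rule, the function name, and the toy neuron names are all assumptions. With breadth-first layers, every retained edge connects adjacent layers, so the procedure used in the study may differ from this sketch.

```python
def constrained_dag(edges, sensory):
    """Layer nodes breadth-first from the sensory neurons.

    Returns (layer, dag), where layer maps node -> layer index and dag is
    the set of edges kept. Assumption: an edge is kept only if it points
    from an earlier layer to a later layer, in its original direction.
    """
    successors = {}
    for u, v in edges:
        successors.setdefault(u, set()).add(v)

    layer = {n: 0 for n in sensory}  # step 1: sensory neurons form layer 0
    frontier = set(sensory)
    depth = 0
    while frontier:  # steps 2-3: expand until no unused node is reached
        depth += 1
        reached = set()
        for u in frontier:
            for v in successors.get(u, ()):
                if v not in layer:  # "unused" node
                    reached.add(v)
        for v in reached:
            layer[v] = depth
        frontier = reached

    dag = {(u, v) for u, v in edges
           if u in layer and v in layer and layer[u] < layer[v]}
    return layer, dag

# Toy connectome (hypothetical names) with feedback and within-layer edges.
toy_edges = [("S1", "I1"), ("S2", "I1"), ("I1", "M1"),
             ("M1", "I1"), ("I1", "S2"), ("S1", "S2")]
toy_layer, toy_dag = constrained_dag(toy_edges, sensory=["S1", "S2"])
```

In the toy example, the feedback edges `M1 -> I1` and `I1 -> S2` and the within-layer edge `S1 -> S2` are removed, while every remaining edge keeps its original direction.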

Network architecture

We constructed the neural network architecture from the network topology in the same way as the previous study (Fig. 2) [7]. The source node is a 2D convolution with a kernel size of 3, a stride of 2, and a padding of 1. All the DAG nodes are assigned identical processing units: a rectified linear unit (ReLU) and a convolution, followed by batch normalization. The convolution operation is performed by 78 parallel kernels, each of which is a 2D convolution with a kernel size of 3, a stride of 1, and a padding of 1. The output of every node is passed on to the next nodes following the edge directions in the DAG. Since every node except the source node can receive inputs from multiple nodes, the inputs to each node are aggregated by taking a weighted sum. The outputs of the output nodes are averaged and fed into the sink node. The sink node is a 2D convolution with a kernel size of 1 and a stride of 1, followed by a fully connected layer for class decision. The networks were implemented and trained using the Torch framework with its Python interface, adopted from the previous study [7].
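To make the effect of these convolution settings concrete, the short calculation below traces the spatial size of a 28×28 input through the source node, a DAG node, and the sink node, using the standard output-size formula for a convolution. The helper name is hypothetical, and the padding of 0 for the sink convolution is an assumption, since it is not stated in the text.

```python
def conv_output_size(size, kernel, stride, padding):
    """Spatial output size of a 2D convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1

input_side = 28  # MNIST and fashion-MNIST images are 28x28 pixels

# Source node: kernel 3, stride 2, padding 1 (halves the resolution)
after_source = conv_output_size(input_side, kernel=3, stride=2, padding=1)

# DAG node: kernel 3, stride 1, padding 1 (preserves the resolution)
after_dag_node = conv_output_size(after_source, kernel=3, stride=1, padding=1)

# Sink node: kernel 1, stride 1; padding 0 is assumed here
after_sink = conv_output_size(after_dag_node, kernel=1, stride=1, padding=0)
```

Because every DAG node preserves the spatial size, the outputs of different nodes can be combined by a weighted sum without any resizing.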

Training and evaluation

We trained and evaluated the two networks on the MNIST and fashion-MNIST datasets [18, 19]. The MNIST dataset consists of 28×28-pixel grayscale images of hand-written digits. There are 60,000 images for training and 10,000 images for testing, and the number of images is approximately the same for each digit. The fashion-MNIST dataset contains images of fashion items such as clothes, bags, and shoes, whose dimensions and numbers are the same as those of the MNIST images. Following conventional preprocessing, each image was normalized with a mean of 0.5 and a standard deviation of 1. No data augmentation was performed. In consecutive training iterations, the network was fed a batch of 100 images taken sequentially from the training set. The error measure was the negative log-likelihood. With the ADAM optimizer [20], the learning rate was initially set to 0.004 and was multiplied by 0.1 every 10,000 iterations. After each training epoch, the training dataset was reshuffled to prevent the network from learning particular patterns in the order of the images.
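The training settings above translate into a few simple formulas, sketched here in plain Python. The function names are hypothetical, and the sketch assumes that pixel values are scaled to the range [0, 1] before normalization, which is not stated in the text.

```python
import math

def learning_rate(iteration, base_lr=0.004, decay=0.1, step=10_000):
    """Step decay: multiply the rate by `decay` every `step` iterations."""
    return base_lr * decay ** (iteration // step)

def normalize(pixel, mean=0.5, std=1.0):
    """Normalize one pixel value, assumed to be already scaled to [0, 1]."""
    return (pixel - mean) / std

def negative_log_likelihood(probabilities, label):
    """Loss for one image, given the predicted class probabilities."""
    return -math.log(probabilities[label])

TRAIN_IMAGES = 60_000
BATCH_SIZE = 100
iterations_per_epoch = TRAIN_IMAGES // BATCH_SIZE
```

With a batch size of 100, one epoch takes 600 iterations, so 15,000 iterations correspond to 25 epochs, and the learning rate drops from 0.004 to 0.0004 once, at iteration 10,000, within that span.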

RESULTS

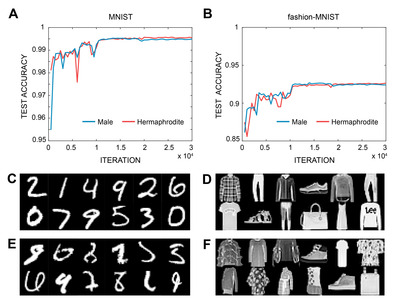

The image classification accuracy of the two networks was measured on the test sets. For the MNIST classification task, the hermaphrodite network reached 99.6% accuracy and the male network 99.5% after roughly 15,000 iterations (Fig. 3A). For fashion-MNIST, the hermaphrodite network reached 92.7% accuracy and the male network 92.6% after roughly 15,000 iterations (Fig. 3B).

Inspection of the errors reveals that most of the misclassified MNIST digit images are harder for a human to recognize than the correctly classified ones (Fig. 3C, E). Similarly, the misclassified fashion images are less clear than the correctly classified images (Fig. 3D, F). However, not all the misclassified fashion images are unrecognizable to a human, which suggests that fashion-MNIST is more difficult for ANNs to classify than MNIST because of the complexity of the images.

The two networks exhibited similar accuracy, differing by no more than about 0.1 percentage point on both tasks. The connectomes of the two sexes differ chiefly in the number of sex-specific neurons, and their network topologies are almost identical (Fig. 2) [14]. This may suggest that the sex-specific neurons play only a minor role in image classification, because the sex-specific neurons of the male form an extra network added to the neural network of the hermaphrodite and lie outside the main path of information flow.

These networks achieved higher accuracy than the networks based on the random DAG (Table 1). The accuracy of our networks exceeds the reported mean accuracy of the random DAG network by 0.6 percentage points on MNIST and by 2.7 percentage points on fashion-MNIST. Since there is no other significant difference in the training procedures, we attribute the higher accuracy to the method of constructing the DAG from the connectome, which preserves the structure of information flow. In addition, the MNIST accuracy of our networks, with 0.21 million adjustable parameters, is comparable to that reported for a 2-layer convolutional neural network with 0.13 million parameters and for a 6-layer deep neural network with 12 million parameters [21, 22]. In the previous study, the accuracy can take a range of values because of the randomness of the DAG, but the range was not reported numerically; only an error bar on a plot was given. Apart from the random DAG network, the networks applied to MNIST, including those in Table 1, report only a single accuracy value because the tests are performed on a fixed set of data. Since the goal of these studies is to maximize accuracy on the fixed test set, their performance can only be evaluated by single numbers without error bars, and the works are judged by those numbers.

DISCUSSION

We showed that the neural networks, constructed from

The biologically inspired ANN in this study is a variant of conventional CNNs: it uses the same convolution operations, but its network architecture is irregular, whereas that of conventional CNNs is regular. A typical CNN is composed of layers with similar numbers of nodes, and the nodes in adjacent layers are fully connected, so that all the nodes in a layer have the same number of connections. In recent studies, “skip connections” are employed to connect nodes between distant layers, and these are also regular. In contrast, the network in this study has a highly varying number of nodes per layer and heterogeneous numbers of connections. The skip connections, designed by nature, are also irregular and more frequent than in typical CNNs. While the irregular architecture increases the computational cost in both running time and memory, the frequent skip connections can partly alleviate this problem and help prevent the “vanishing gradient” problem in the learning algorithms [24]. Note that the computational cost may not be intrinsic to biological networks; it may arise because the biological computation is performed on a machine with a different framework.

We also note that the nodes used in this study are all identical and that the edge weights of a single node can take arbitrary values, either positive or negative. This is the common setting for most ANNs. The positive and negative connection weights correspond to the excitatory and inhibitory synaptic connections of biological networks. In biological networks, however, the neurons themselves are generally either excitatory or inhibitory, which means that the output synaptic connections of a single neuron are either all excitatory or all inhibitory. It is not clear whether the natural selection of excitatory and inhibitory neurons, rather than excitatory and inhibitory synapses, served to improve computational efficiency or was a compromise with biological limitations. Introducing excitatory and inhibitory nodes into ANNs, with output weights that are all positive or all negative, may lead to interesting results. More generally, the use of various types of neurons, such as the interneurons commonly found in biological networks, may be critical in improving the performance of ANNs. For instance, a large part of the success of CNNs is attributed to the convolution and pooling operations, which respectively perform filtering and down-sampling and imitate the simple and complex cells first discovered in the visual cortex of the cat [25]. Likewise, an extensive survey of computational components that mimic various functions of biological neurons is needed for a revolutionary advancement of ANNs.

Early AI, particularly ANNs, advanced under the inspiration of neuroscience, mimicking the functional mechanisms of biological neurons and neural networks. Although this relation appears to have weakened recently, inspiration from neuroscience is still crucial for the advancement of AI. If the goal of an AI is to solve a specific engineering problem, any solution that serves the goal may be acceptable, and the AI is not required to imitate the mechanisms of biological neural systems. Nevertheless, finding a solution in the vast search space is so difficult that hints from neuroscience can greatly reduce the search space and accelerate the search. The same argument applies to the design of network architecture, as suggested in this study [23].

This study also showcases that biological networks can be used for the architecture of ANNs. This may suggest that the biological networks, which have evolved specifically to serve the computational demand for the survival of an organism, have the plasticity that is capable of general neural computation.

ACKNOWLEDGEMENTS

This research was supported by KBRI Basic Research Program (21-BR-03-01) funded by the Korean Ministry of Science and ICT. C.P. acknowledges the support by the BK21 FOUR program through NRF funded by the Korean Ministry of Education.

AUTHOR CONTRIBUTION

C.P. and J.S.K. designed the project, interpreted the data, and wrote the paper. C.P. wrote the codes and performed the experiments. J.S.K. supervised the work.

Tables

Table 1. Accuracy of different network types for MNIST image classification. The accuracy of the network in this study based on the constrained DAG is superior to the case of the random DAG and is comparable to a few deep neural networks with common architecture.

| Network type | Accuracy | Reference |
|---|---|---|
| Random DAG from | 99% | [7] |
| Random DAG from Watts-Strogatz model | 99.3% | [7] |
| Convolutional neural network* | 99.6% | [21] |
| Constrained DAG from | 99.6% | This work |
| Deep neural network* | 99.6% | [22] |

*Data augmentation was applied to the images before feeding to the networks.

References

- Rosenblatt F (1962) Principles of neurodynamics: perceptrons and the theory of brain mechanisms. Spartan Books, Washington, D.C

- Zoph B, Le QV (2017) Neural architecture search with reinforcement learning. arXiv. doi: 10.48550/arXiv.1611.01578

- Zoph B, Vasudevan V, Shlens J, Le QV (2018) Learning transferable architectures for scalable image recognition. In: 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (Mortensen E, Brendel W, eds), pp 8697-8710. IEEE, Piscataway, NJ

- Pham H, Guan M, Zoph B, Le QV, Dean J (2018) Efficient neural architecture search via parameters sharing. PMLR 80:4095-4104

- Luo R, Tian F, Qin T, Chen E, Liu TY (2018) Neural architecture optimization. In: Advances in Neural Information Processing Systems 31 (NeurIPS 2018) (Bengio S, Wallach H, Larochelle H, Grauman K, Cesa-Bianchi N, Garnett R, eds), pp 7816-7827. NeurIPS, La Jolla, CA

- Abbott LF, Bock DD, Callaway EM, Denk W, Dulac C, Fairhall AL, Fiete I, Harris KM, Helmstaedter M, Jain V, Kasthuri N, LeCun Y, Lichtman JW, Littlewood PB, Luo L, Maunsell JHR, Reid RC, Rosen BR, Rubin GM, Sejnowski TJ, Seung HS, Svoboda K, Tank DW, Tsao D, Van Essen DC (2020) The mind of a mouse. Cell 182:1372-1376

- Roberts N, Yap DA, Prabhu VU (2019) Deep connectomics networks: neural network architectures inspired by neuronal networks. arXiv. doi: 10.48550/arXiv.1912.08986

- LeCun Y, Kavukcuoglu K, Farabet C (2010) Convolutional networks and applications in vision. In: Proceedings of 2010 IEEE International Symposium on Circuits and Systems (Ea T, Belleville M, eds), pp 253-256. IEEE, Piscataway, NJ

- Krizhevsky A, Sutskever I, Hinton GE (2012) ImageNet classification with deep convolutional neural networks. In: Advances in Neural Information Processing Systems 25 (NIPS 2012) (Pereira F, Burges CJ, Bottou L, Weinberger KQ, eds), pp 1097-1105. NeurIPS, La Jolla, CA

- Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, Erhan D, Vanhoucke V, Rabinovich A (2015) Going deeper with convolutions. In: 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp 1-9. IEEE, Piscataway, NJ

- He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp 770-778. IEEE, Piscataway, NJ

- Lee K, Zung J, Li P, Jain V, Seung HS (2017) Superhuman accuracy on the SNEMI3D connectomics challenge. arXiv. doi: 10.48550/arXiv.1706.00120

- Emmons Lab (2020) WormWiring: nematode connectomics [Internet]. Albert Einstein College of Medicine, Bronx, NY. Available from: https://www.wormwiring.org/pages/adjacency.html

- Cook SJ, Jarrell TA, Brittin CA, Wang Y, Bloniarz AE, Yakovlev MA, Nguyen KCQ, Tang LT, Bayer EA, Duerr JS, Bülow HE, Hobert O, Hall DH, Emmons SW (2019) Whole-animal connectomes of both Caenorhabditis elegans sexes. Nature 571:63-71

- Sherstinsky A (2020) Fundamentals of recurrent neural network (RNN) and long short-term memory (LSTM) network. Physica D Nonlinear Phenom 404:132306

- Pascanu R, Mikolov T, Bengio Y (2013) On the difficulty of training recurrent neural networks. PMLR 28:1310-1318

- Xie S, Kirillov A, Girshick R, He K (2019) Exploring randomly wired neural networks for image recognition. In: the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), pp 1284-1293. IEEE, Piscataway, NJ

- LeCun Y, Bottou L, Bengio Y, Haffner P (1998) Gradient-based learning applied to document recognition. Proc IEEE 86:2278-2324

- Xiao H, Rasul K, Vollgraf R (2017) Fashion-MNIST: a novel image dataset for benchmarking machine learning algorithms. arXiv. doi: 10.48550/arXiv.1708.07747

- Kingma DP, Ba JL (2017) Adam: a method for stochastic optimization. arXiv. doi: 10.48550/arXiv.1412.6980

- Simard PY, Steinkraus D, Platt JC (2003) Best practices for convolutional neural networks applied to visual document analysis. In: the 7th International Conference on Document Analysis and Recognition, pp 958-963. IEEE, Piscataway, NJ

- Cireşan DC, Meier U, Gambardella LM, Schmidhuber J (2010) Deep, big, simple neural nets for handwritten digit recognition. Neural Comput 22:3207-3220

- Hassabis D, Kumaran D, Summerfield C, Botvinick M (2017) Neuroscience-inspired artificial intelligence. Neuron 95:245-258

- Panigrahi A, Chen Y, Kuo CCJ (2018) Analysis on gradient propagation in batch normalized residual networks. arXiv. doi: 10.48550/arXiv.1812.00342

- Hubel DH, Wiesel TN (1962) Receptive fields, binocular interaction and functional architecture in the cat's visual cortex. J Physiol 160:106-154